Thanks, JEDelta, for that effort. That is a lot of work, and expense too, so it is appreciated.

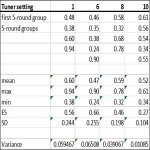

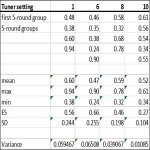

I am not a stats guy; I just mess around with stats (for serious work I would hire a stats professional). At first glance at the means (averages) of your replicated 5-shot groups for tuner settings 1, 6, 8 and 10, I suspected that they might not be statistically "significantly different". The means range only from 0.47 to 0.60, and given the size of the ESs and SDs (see the augmented table below), the distributions overlap a lot.

Excel has a handy ANOVA (analysis of variance) tool to test whether the means of several sample groups differ significantly.

For help with ANOVA, this video shows a simple how-to example in Excel, including how to enable the Analysis ToolPak add-in that provides Excel's statistical tools:

https://www.youtube.com/watch?v=ZvfO7-J5u34

We can use this to test our null hypothesis: the tuner has no effect on the mean group size. The alternative hypothesis is that at least one tuner setting's mean differs significantly, i.e. the tuner does cause a significant difference in the groups. But before we can accept the alternative hypothesis, the data must let us reject the null hypothesis.

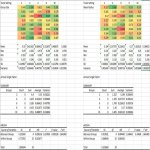

Table 1 below is the copied data for the 5-shot group replicates for tuner settings 1, 6, 8 and 10, with ES, SD and variance added:

CAVEAT: By the usual rule of thumb, the sample sizes here per tuner setting are not large enough for a robust test. With so few replicates the test has little power to detect a real but modest difference, so we cannot be confident whether these means really differ or not. For a strong, confident test, a common rule of thumb calls for at least 30 replicates per tuner setting. I know, I know, that is a huge effort and expense!

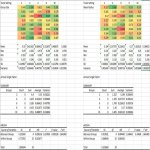
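The extra columns in Table 1 are easy to reproduce outside Excel. Below is a small Python sketch, assuming that ES means the largest minus the smallest group size for a setting, and that SD and variance are the sample (n - 1) versions, as Excel's STDEV.S and VAR.S compute them. The function name `summarize` is my own.

```python
import statistics
from typing import Dict, Sequence


def summarize(group_sizes: Sequence[float]) -> Dict[str, float]:
    """Summary stats for one tuner setting's replicated group sizes (hypothetical helper)."""
    if len(group_sizes) < 2:
        raise ValueError("need at least two groups to estimate SD and variance")
    return {
        "n": len(group_sizes),
        "mean": statistics.mean(group_sizes),
        # Assumed definition: ES = largest group size minus smallest group size
        "es": max(group_sizes) - min(group_sizes),
        # Sample SD and variance (n - 1 denominator), like Excel's STDEV.S / VAR.S
        "sd": statistics.stdev(group_sizes),
        "variance": statistics.variance(group_sizes),
    }
```

Call it once per tuner setting, passing that setting's list of group sizes from the table.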

The conventional significance threshold is P <= 0.05, so I used that for the test.

Table 2 below is the ANOVA output from Excel:

The P value is 0.74. That is huge, far above the 0.05 threshold, so the tuner setting means (averages) are not significantly different. Therefore we cannot reject the null hypothesis, i.e. the mean group sizes are not significantly different.
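For anyone who wants to cross-check Excel's numbers, here is a minimal Python sketch of the same single-factor (one-way) ANOVA. It computes the between- and within-group sums of squares, degrees of freedom, mean squares, F and the P value (roughly the columns in Excel's "Anova: Single Factor" output) and applies the P <= 0.05 rule. The function names are my own, and the P value comes from a textbook continued-fraction evaluation of the F distribution rather than anything Excel does internally, so treat it as a cross-check only. It does not test ANOVA's own assumptions (roughly normal groups with similar variances).

```python
import math
from typing import Sequence


def _betacf(a: float, b: float, x: float, max_iter: int = 300, eps: float = 3e-14) -> float:
    """Continued fraction for the regularized incomplete beta (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the continued fraction
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the continued fraction
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def _reg_inc_beta(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    # Use the symmetry I_x(a, b) = 1 - I_{1-x}(b, a) on the side where the fraction converges fast
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def f_survival(f_stat: float, df1: int, df2: int) -> float:
    """P(F > f_stat) for an F distribution with (df1, df2) degrees of freedom."""
    return _reg_inc_beta(df2 / 2.0, df1 / 2.0, df2 / (df2 + df1 * f_stat))


def one_way_anova(groups: Sequence[Sequence[float]], alpha: float = 0.05) -> dict:
    """Single-factor ANOVA: one list of group sizes per tuner setting."""
    k = len(groups)
    if k < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups")
    n_total = sum(len(g) for g in groups)
    if n_total <= k:
        raise ValueError("need more observations than groups")
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variation of the setting means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of individual groups around their own setting's mean
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))
    if ss_within == 0:
        raise ValueError("no within-setting variation; F is undefined")
    df_between, df_within = k - 1, n_total - k
    ms_between, ms_within = ss_between / df_between, ss_within / df_within
    f_stat = ms_between / ms_within
    p_value = f_survival(f_stat, df_between, df_within)
    return {
        "SS_between": ss_between, "SS_within": ss_within,
        "df_between": df_between, "df_within": df_within,
        "MS_between": ms_between, "MS_within": ms_within,
        "F": f_stat, "p_value": p_value,
        # Reject the null hypothesis only when P <= alpha
        "reject_null": p_value <= alpha,
    }
```

Feed it one list of group sizes per tuner setting, taken from Table 1, e.g. `one_way_anova([sizes_1, sizes_6, sizes_8, sizes_10])` (the list names are placeholders). If SciPy is installed, `scipy.stats.f_oneway(sizes_1, sizes_6, sizes_8, sizes_10)` runs the same test and should give the same F and P value.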

HOWEVER... statistics can lie, or at least mislead (mainly because the sample sizes are too small, or because the wrong test is chosen for the data's assumptions).

When we look at tuner setting 10, we can see what looks like a much smaller ES and SD. The group mean of tuner setting 6 (0.47) is smaller than that of tuner setting 10 (0.52), but setting 10's ES is less than half of setting 6's ES. Based on the ES, I would choose tuner setting 10 in a match. In a match for score, I would much rather have that small 0.27 ES working in my favour.

That said, more replicates of these settings could change the data, and the differences between the settings, considerably.
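One way to see why more replicates help: the uncertainty in each setting's mean group size, its standard error, is roughly the SD divided by the square root of the number of groups. A minimal sketch, with function names of my own and the assumption that the SD stays about the same as more groups are shot:

```python
import math


def standard_error(sd: float, n: int) -> float:
    """Standard error of a mean estimated from n groups with sample SD `sd`."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return sd / math.sqrt(n)


def shrink_factor(n_now: int, n_target: int) -> float:
    """How many times smaller the standard error gets going from n_now to n_target groups,
    assuming the SD itself stays roughly constant."""
    return standard_error(1.0, n_now) / standard_error(1.0, n_target)
```

For example, going from, say, 5 to 30 groups per setting cuts the standard error by a factor of about 2.45 (the square root of 6), so means that overlap today could separate, or converge, with more shooting.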

Rimfire stats are slippery things, and everything changes with a new lot of ammo!